What really changes when developers intend to improve their source code: a commit-level study of static metric value and static analysis warning changes

This website accompanies the publication and provides additional material.

What?

We manually classified 2,533 commit messages as improving internal quality, improving external quality, or neither.

Internal quality improvements (perfective changes) improve the formatting, complexity or structure of the source code, while external quality improvements (corrective changes) fix defects. We followed a set of guidelines so that our manual classification can be replicated.

We then used this data first to evaluate and then to fine-tune a state-of-the-art deep learning model for natural language processing that was used in previous work.

This model performed well at classifying commits automatically. We therefore used it to classify the rest of our data, which increases the number of commits available for our analysis to 125,482.

We extract a range of static source code metrics that were collected as part of the SmartSHARK project.

For every metric we provide the delta (what changed in the commit) as well as the current and previous values. For example, a delta can express that McCabe Complexity decreased by 2 in a commit that improves internal quality.
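The following minimal Python sketch shows how such deltas can be derived and summarized. It assumes a hypothetical record format (`FileMetric`) that holds one metric of one file before and after a commit, together with the commit's label. The field names and the metric key `"mccabe"` are illustrative and do not reflect the SmartSHARK schema. The delta is the current value minus the previous value, so a negative delta means the metric decreased.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class FileMetric:
    """Hypothetical record: one metric of one file touched by one commit."""
    commit_id: str
    label: str       # e.g. "perfective", "corrective" or "other"
    metric: str      # hypothetical metric key, e.g. "mccabe"
    previous: float  # value before the commit
    current: float   # value after the commit

    @property
    def delta(self) -> float:
        # Negative delta: the metric decreased in this commit.
        return self.current - self.previous


def median_delta_by_label(records: list[FileMetric], metric: str) -> dict[str, float]:
    """Median delta of one metric, grouped by the commit label."""
    groups: dict[str, list[float]] = {}
    for record in records:
        if record.metric == metric:
            groups.setdefault(record.label, []).append(record.delta)
    return {label: median(deltas) for label, deltas in groups.items()}


# Made-up example values, not data from the study.
records = [
    FileMetric("c1", "perfective", "mccabe", previous=10.0, current=8.0),
    FileMetric("c2", "perfective", "mccabe", previous=5.0, current=4.0),
    FileMetric("c3", "other", "mccabe", previous=3.0, current=6.0),
]
print(median_delta_by_label(records, "mccabe"))  # {'perfective': -1.5, 'other': 3.0}
```

This sketch does not decide whether to aggregate deltas per file or per commit before taking the median. Here, each file-level record counts once.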

On this page we provide the plots and tables from the publication. Additionally, we provide the data for the ground truth alone, as well as a slightly different dataset that includes only changed files (excluding pure additions and deletions).
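The restriction to changed files can be expressed as a simple filter. This sketch makes a hypothetical assumption: a pure addition appears as a file without a previous metric value, and a pure deletion appears as a file without a current value. The actual data may encode additions and deletions differently.

```python
from typing import NamedTuple, Optional


class FileChange(NamedTuple):
    """Hypothetical row: one metric value of a file before and after a commit."""
    path: str
    previous: Optional[float]  # None if the commit added the file
    current: Optional[float]   # None if the commit deleted the file


def changed_files_only(rows: list[FileChange]) -> list[FileChange]:
    """Keep modified files; drop pure additions and pure deletions."""
    return [row for row in rows if row.previous is not None and row.current is not None]


rows = [
    FileChange("Added.java", None, 4.0),
    FileChange("Modified.java", 7.0, 5.0),
    FileChange("Deleted.java", 3.0, None),
]
print(changed_files_only(rows))  # [FileChange(path='Modified.java', previous=7.0, current=5.0)]
```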

Why?

We want to know what changes in commits that improve the quality of the source code or that fix a bug. More importantly, we want to know what changes in comparison to all other commits, e.g., feature additions. This is quite resource-intensive, because we have to collect a range of metrics not only for the commits we are interested in but also for all other commits.

Software quality models use static source code metrics to measure the quality of source code. Defect prediction models use static source code metrics (and in some cases process metrics) to predict defects in files or changes. This hints at the importance of static source code metrics and their connection to source code quality.

Previous research makes certain assumptions, e.g., that complexity decreases or that comment density increases when source code quality improves.

Research published at ICSE 2021 that used fMRI scans of developers comprehending source code showed that "traditional" complexity metrics like McCabe Complexity might not be a good measure of code complexity as experienced by developers.

With a wealth of open source software projects from which we can extract information, we can examine what really changes when developers intend to improve the quality of their codebase.

Our work provides insights into which static software metrics change when developers describe their change as quality improving.

How?

We use a publicly available database dump from the SmartSHARK project that provides commits and a wide range of static source code metrics via OpenStaticAnalyzer.

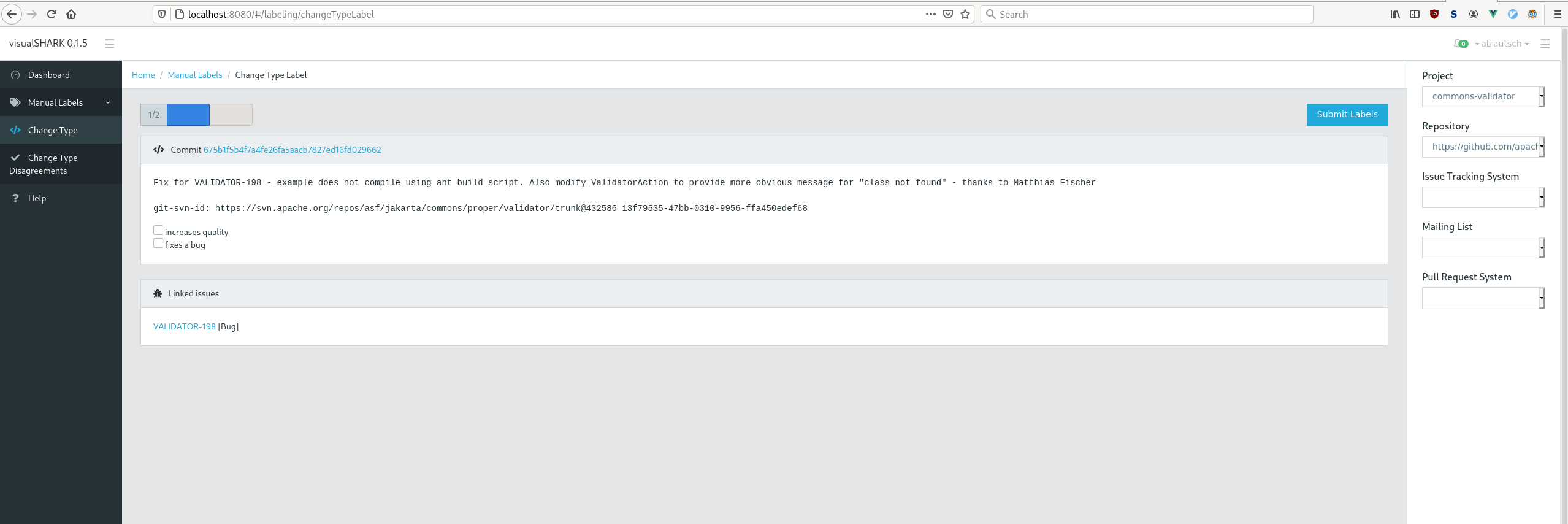

To create manual labels that annotate whether a commit is intended as a perfective or corrective change, we use a web application in which two researchers label each change independently.

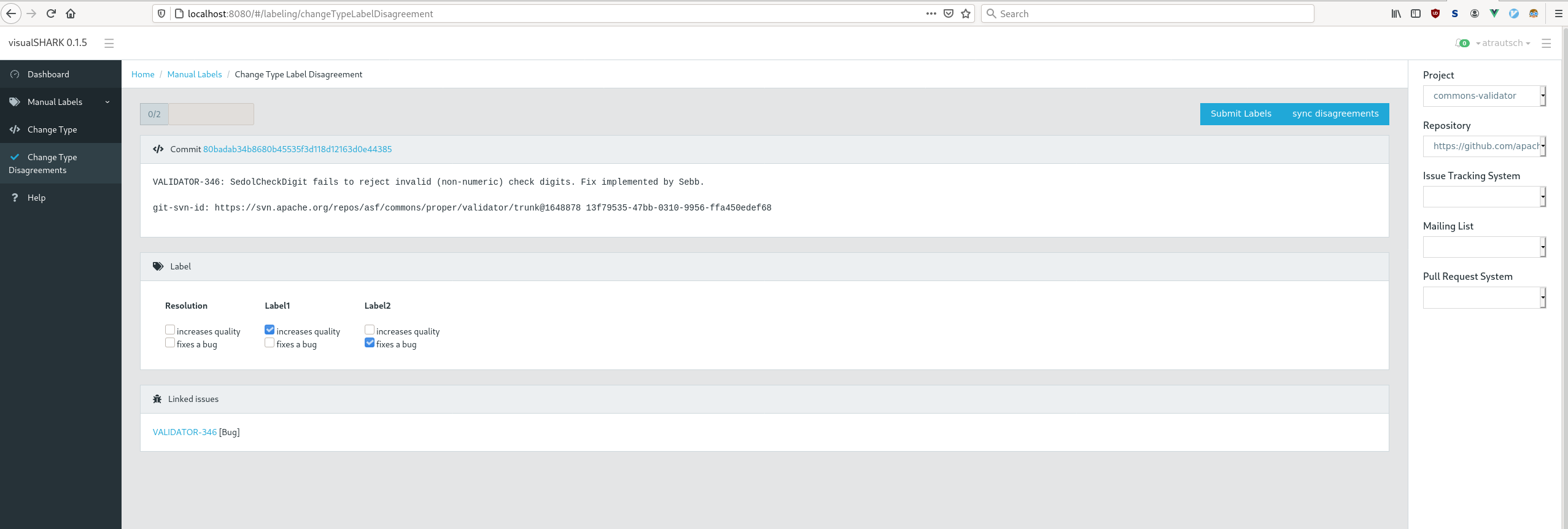

If the two researchers disagree, the user interface provides a conflict resolution view. This view shows all information together with the anonymized previous labels in random order.
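The conflict handling can be sketched as follows. This is a minimal illustration, not the web application's code. The `Label` type, the researcher identifiers and the single-category encoding (`"perfective"`, `"corrective"` or `"neither"`) are all assumptions. The function returns `None` when both labels agree. Otherwise it strips the researcher names and shuffles the labels, so that neither authorship nor position reveals who chose what.

```python
import random
from typing import NamedTuple, Optional


class Label(NamedTuple):
    """Hypothetical label given by one researcher to one commit."""
    researcher: str  # hypothetical researcher identifier
    category: str    # "perfective", "corrective" or "neither"


def conflict_view(first: Label, second: Label, rng: random.Random) -> Optional[list[str]]:
    """Return anonymized labels in random order, or None if both labels agree."""
    if first.category == second.category:
        return None  # agreement: no resolution needed
    # Keep only the categories so the resolver cannot see who chose what.
    anonymized = [first.category, second.category]
    # Shuffle so that the position of a label does not reveal its author.
    rng.shuffle(anonymized)
    return anonymized


rng = random.Random(42)
print(conflict_view(Label("r1", "perfective"), Label("r2", "perfective"), rng))  # None
print(conflict_view(Label("r1", "perfective"), Label("r2", "neither"), rng))
```

Passing the random generator in explicitly keeps the shuffle reproducible, for example in tests.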

We use the manual classifications as ground truth to fine-tune a deep learning model pre-trained on software engineering data. This model is used to classify the rest of our data.

Results

We confirm the findings of prior studies that perfective and corrective commits are smaller than other commits. We find that perfective commits affect static source code metrics positively, e.g., complexity decreases. Corrective commits are more complex: complexity rises after the bug fix instead of going down. While we did not expect complexity to decrease, note that the comparison is against all other non-corrective changes, which include feature additions.

As part of an exploratory study, we find that corrective changes are applied to files that have a higher median complexity before the change than other files. This is expected, as these changes are bug fixes. However, we also find that perfective changes are applied to files whose median complexity before the change is already low. The same pattern holds for all other metrics, e.g., comment density is already higher and coupling between objects is already lower.